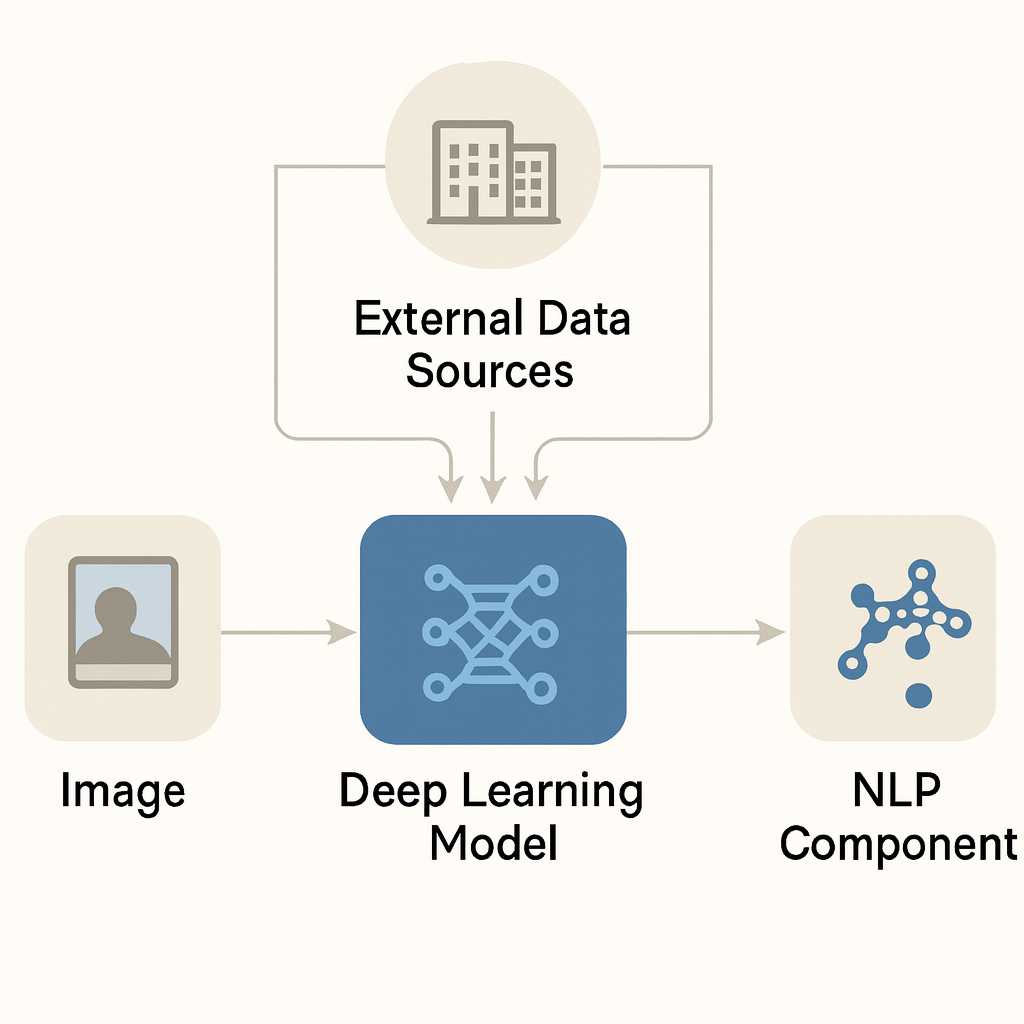

I was responsible for the entire technical concept, feasibility study, and client communication. Over the course of the project, I developed a deep learning–based system for automated image captioning.

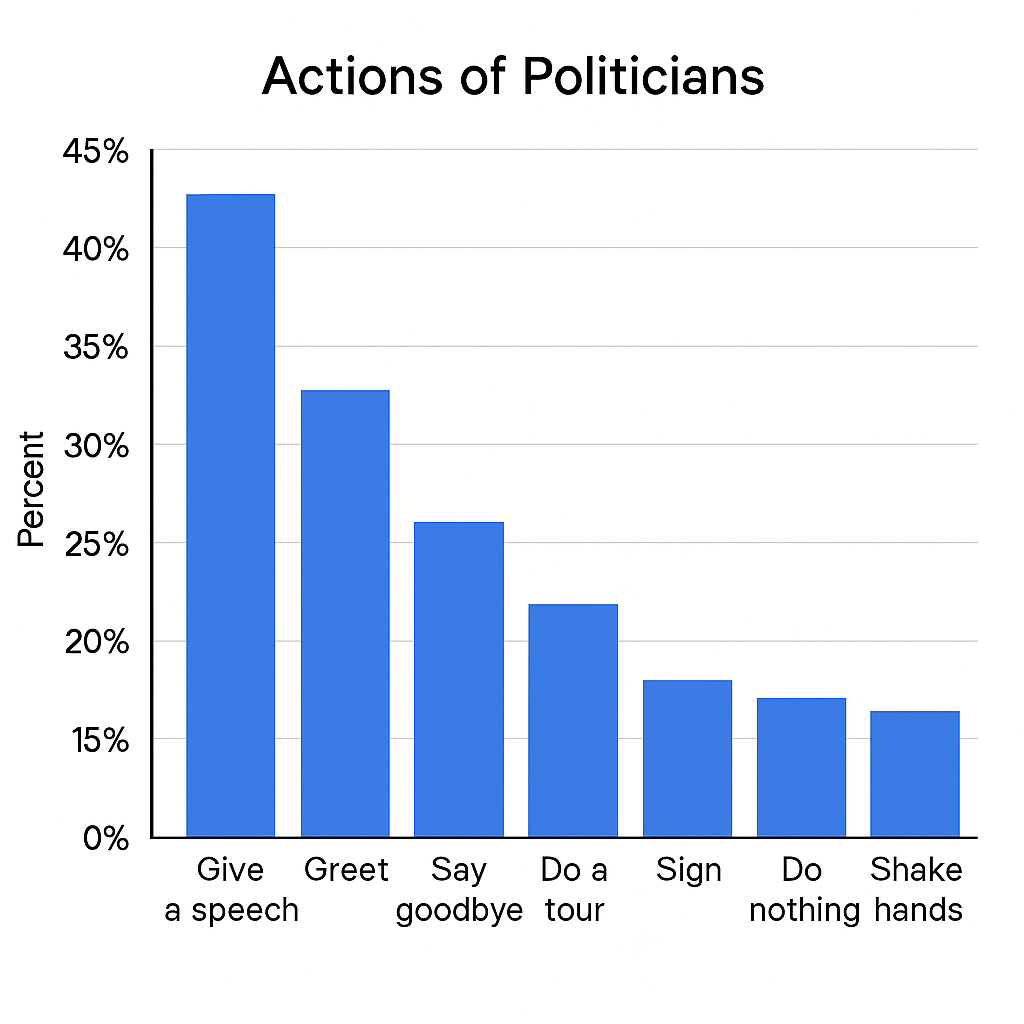

The main challenge was to combine multiple data sources, including the image itself, into a single grammatically correct German sentence describing what a person was doing in the picture. Since the target group consisted of politicians, the range of actions turned out to be narrow: we quickly discovered that 98% of all images depicted one of only seven distinct actions.

By applying transfer learning from an existing computer vision model originally trained to classify sports actions, we achieved excellent results. Generating the final sentence then required a substantial amount of NLP work: the sentence had to describe the action and also include the politician’s name, the location, and the occasion, all extracted from various external data sources.
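To illustrate the sentence-generation step, here is a minimal template-based sketch in Python. It is not the project's actual code, which remains confidential. The action labels, the German templates, the `CaptionInput` fields, and the placeholder name are all hypothetical. It also assumes the occasion arrives as an already inflected phrase, which sidesteps the case and gender handling a real system would need.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical action labels mapped to German sentence templates.
# {context} is a slot for occasion and location; German word order
# places these adverbials between the verb and its object.
TEMPLATES = {
    "giving_speech": "{name} hält {context} eine Rede.",
    "shaking_hands": "{name} schüttelt {context} Hände.",
    "signing_document": "{name} unterzeichnet {context} ein Dokument.",
}
# Used when the classifier returns a label without a template.
FALLBACK_TEMPLATE = "{name} ist {context} zu sehen."


@dataclass
class CaptionInput:
    name: str                              # from an external data source
    action: str                            # label predicted by the image classifier
    location: Optional[str] = None         # e.g. "Berlin"
    occasion_phrase: Optional[str] = None  # assumed pre-inflected, e.g. "auf dem Parteitag"


def build_caption(item: CaptionInput) -> str:
    """Fill the template for the predicted action with the available metadata."""
    context_parts = []
    if item.occasion_phrase:
        context_parts.append(item.occasion_phrase)
    if item.location:
        context_parts.append(f"in {item.location}")
    template = TEMPLATES.get(item.action, FALLBACK_TEMPLATE)
    sentence = template.format(name=item.name, context=" ".join(context_parts))
    # Collapse the double space left behind when the context slot is empty.
    return " ".join(sentence.split())


if __name__ == "__main__":
    example = CaptionInput(
        name="Erika Mustermann",
        action="giving_speech",
        location="Berlin",
        occasion_phrase="auf dem Parteitag",
    )
    print(build_caption(example))
```

Keeping one template per action works here only because the set of actions is so small. The fallback template covers the remaining images without producing an ungrammatical sentence.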

This work was done before powerful LLMs became available, so these captioning capabilities had to be built manually and from scratch.

The outcome was a system that exceeded client expectations and demonstrated the real potential of AI-driven automation in public image documentation.

For confidentiality reasons, I’m unable to showcase the actual system. The images shown here are AI-generated and do not represent the real project.